

Communications Earth & Environment volume 3, Article number: 177 (2022)


Public understanding of complex issues such as climate change relies heavily on online resources. Yet the role that online instruction should assume in post-secondary science education remains contentious despite its near ubiquity during the COVID-19 pandemic. The objective here was to compare the performance of 1790 undergraduates taking either an online or a face-to-face version of an introductory course on climate change. Both versions were taught by the same instructor, thereby minimizing instructor bias. Women, seniors, English language learners, and humanities majors disproportionately chose to enroll in the online version because of its ease of scheduling and accessibility. After correcting for performance gaps among different demographic groups, we found that the COVID-19 pandemic had no significant effect on online student performance and that students in the online version scored 2% lower (on a scale of 0–100) than those in the face-to-face version, a penalty that may be a reasonable tradeoff for the ease of scheduling and accessibility that these students desire.

Support for policies that address climate change depends on an educated populace that comprehends difficult scientific concepts. To forestall action on climate change, the government of the United States in 2017 removed hundreds of webpages about climate change from the websites of federal agencies and departments and scrubbed the term “climate change” from thousands of others. Only four years later, after a new administration took office, was this censorship reversed. Also troubling is that some reliable sources of information have become less suitable for educational purposes. For example, the Assessment Reports of the United Nations Intergovernmental Panel on Climate Change (IPCC) have expanded roughly seven- to twelvefold in length. The reports for Working Group I (the Physical Science) grew from 414 pages in 1990 to 3949 pages in 2021. Those for Working Group II (Impact and Adaptation) grew from 296 pages in 1990 to 3675 pages in 2021. Those for Working Group III (Mitigation) grew from 438 pages in 1995 to 2913 pages in 2022 (Fig. S1). To address these issues, the National Science Foundation of the United States, as part of DUE 09-50396 “Creating a Learning Community for Solutions to Climate Change”, funded the establishment of a nationwide cyber-enabled learning community to develop web-based curricular resources for teaching undergraduates about climate change. One product of this project was a multidisciplinary, introductory online course that is freely available to the public1.

This course was pressed into broader service as schools struggled to provide online materials at the onset of the COVID-19 pandemic. Institutions of higher education received criticism for adopting such courses, largely based on the assumption that online instruction is inherently inferior to that delivered face-to-face. The issue has become whether the convenience and safety of online instruction outweigh the possibility of inferior learning outcomes for today’s undergraduates.

Although the pandemic infused topical urgency into this issue, the issue is hardly new. The efficacy of distance learning has been debated since the External Programme of the University of London first offered a correspondence course in 1858. Correspondence degrees have long been driven by equity concerns for working people and women who could not access colleges2, yet they have historically been perceived as inferior to on-campus education3,4.

Online learning opportunities experienced explosive growth with the advent of widespread internet access and expanded credentialed university programs. In the United States alone, enrollments in online college courses rose from 1.6 million students in 2002 to 6.9 million students in 20185,6. During 2018, 35.3% of undergraduates in the United States took at least one course online, and half of these students took online courses exclusively6. This boom in online offerings coevolved with active learning and EdTech, and today’s online courses tend to be highly interactive, even when asynchronous or self-paced. Indeed, instructional design proponents often position today’s online courses on a spectrum with hybrid learning and flipped classrooms, rather than emphasize their ascent from didactic-style correspondence courses. Advocates for interactive online learning claim that a well-designed online course can be as effective as a face-to-face course, and perhaps even more effective than a traditional course based on passive lecture presentations7,8,9,10.

Despite the new pedagogic paradigm for today’s online courses, familiar critiques of online learning persist4. Detractors cite high attrition rates as evidence that online courses leave students vulnerable to distraction. They also claim that the quality of the educational experience and achievement in an online course cannot match that of a similar face-to-face class. Compounding these critiques, a number of studies in higher education have suggested that online courses, like their historical distance-education counterparts, disproportionately enroll underserved students. If these already-vulnerable students are being attracted to a lower-quality educational experience online, then the proliferation of these courses might constitute an educational trap, exacerbating achievement gaps and creating barriers to persistence and success11,12.

The efficacy of online versus face-to-face courses seems ripe for an evidence-based study, yet high-quality pseudo-experiments that compare the two remain elusive. For example, the U.S. Department of Education in 2010 conducted a meta-analysis of 28 studies comparing online versus face-to-face learning in post-secondary education settings and concluded, “When used by itself, online learning appears to be as effective as conventional classroom instruction, but not more so”13. A re-evaluation of this meta-analysis, however, found that only four of these studies used an appropriate experimental design and examined semester-length college courses. In three of them, the students in the online versions of a course had poorer outcomes than those in the face-to-face versions, whereas in the fourth, the students in the two versions had roughly similar outcomes14.

More recently, several large-scale studies of college students in the United States found that student outcomes (both persistence through the end of the course and final grades) were substantially poorer for online courses than for face-to-face courses15,16,17,18,19,20,21,22,23,24. These studies, however, compared courses that differed in subject matter or instructor, or that enrolled relatively small numbers of students. Because many of these studies were based on dissimilar courses, they could not isolate students’ enrollment decisions to a simple choice between an online and a face-to-face version of the same course. Nor could they account for the potential effects of underserved groups’ preference for one format over the other.

It follows that prior pseudo-experimental studies have also been unable to examine the critical concern that underlies all comparisons of online and face-to-face courses. If a tradeoff does exist between a face-to-face course’s baseline educational outcomes and an online course’s extended accessibility, is the decrease in learning outcomes worth the attendant increase in accessibility? The COVID-19 pandemic has given these tradeoffs new urgency. Universities and students have had to decide how to ensure safe access to courses while preserving learning outcomes amid the disruption of public life.

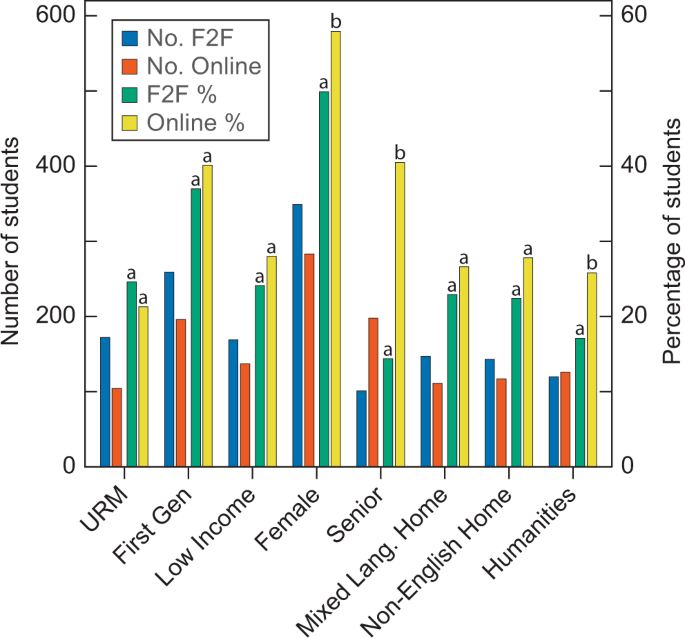

In this study, we seek to dissect student choice, student outcomes, and the tradeoffs between online and face-to-face courses at a large research university through a post-hoc pseudo-experiment. We analyzed student performance against student attributes for 1790 undergraduates of the University of California at Davis (a public research university). Each student enrolled in either an online or a face-to-face version of the introductory course about climate change (for a syllabus of the course see Table 1). Each demographic group had more than 100 students enrolled in the online and face-to-face versions (Fig. 1). Each year, both versions of the course were taught by the same instructor. This minimized major confounding variables such as instructor bias, course design, content differences, and other aspects that might influence student choices and outcomes.

Before the COVID-19 pandemic, we offered both versions of the course during eight Winter quarters and only the online version during six Spring quarters. In Winter and Spring quarters 2021, during the pandemic, we offered the course only online. We surveyed students in two concurrent offerings in Winter 2019 (one face-to-face and one online) and in the pandemic-induced online offerings in Winter 2021 and Spring 2021. The survey asked about their past experiences with online learning and how these experiences influenced their choice between the online and face-to-face versions of the course.

“No. F2F” and “No. Online” are the numbers of students in the face-to-face (F2F) and online versions in each of the following groups:

- underrepresented minorities (URM: African Americans, American Indian/Alaska Native, Chicanx/Latinx including Puerto Rican, and Pacific Islander including Native Hawaiian);
- first-generation college students (First Gen);
- students with an annual family income of less than $80,000 (Low Income);
- students in their last year of college (Senior);
- students majoring in a humanities discipline (Humanities).

“F2F %” and “Online %” are the percentages of students with each trait. Different letters above the bars indicate that the percentage of students with a trait differed significantly (P < 0.05) between the F2F and online versions.

All elements of the course are available for free at https://www.climatechangecourse.org/, including a free multi-media textbook at https://indd.adobe.com/view/7eafc24d-9151-4493-85d2-cb3f2e5a2a51 that is updated regularly. During the period from 2017 to 2021, the online textbook had 5000 new users per year, who each averaged at least 3 views and 10 min per view. Before 2017, a printed version of the textbook was available for purchase25.

Before the COVID-19 pandemic (2013 through 2020), we taught both the online and face-to-face versions of the course concurrently during Winter quarters and only the online version during most Spring quarters. During the pandemic in Winter and Spring quarters 2021, we taught only the online version of the course. We found no significant difference in the grades for students enrolled in the online version before and during the pandemic (Table S1); therefore, in a subsequent analysis that compared the grades between the online and face-to-face versions, we merged the data for Winter and Spring 2021 with earlier data from the online version from 2013 to 2020 (Table 2).

Overall, students performed worse in the online version. Humanities students, underrepresented minorities (i.e., African Americans, American Indian/Alaska Native, Chicanx/Latinx including Puerto Rican, and Pacific Islander including Native Hawaiian), and seniors (i.e., students in their last year) received significantly lower grades than other students, whether enrolled in the online or face-to-face format (Table 2). The factor that consistently had the largest influence on a student’s grade in this course was the student’s overall Grade Point Average (GPA) (Tables 2, S1, S2, S3, and S5). This indicates that students who performed well across their courses also performed well in this course. Students who spoke mixed languages at home and those who were the first generation in their families to attend college received slightly higher grades than other students. The grades of students from low-income families (i.e., annual family income of less than $80,000) did not differ significantly from those of other students.

One concern is that students could choose which version they took in Winter quarters before the COVID-19 pandemic; that is, the assignment of students to a treatment was nonrandom. Disentangling the influence of format selection on student performance from the influence of course format itself proved challenging. We took several approaches to account for format selection, and some of them indicated that students’ choice of course format was a major factor in their grades (see Supplementary Materials: Format Selection).

When students could choose between course formats (Winter quarters before the pandemic), student demographics and average grades differed between formats. Students self-identifying as women, seniors, and humanities majors disproportionately chose to enroll in the online version of the course (Fig. 1). Students in these quarters performed worse in the online version. Notably, humanities students and underrepresented minorities received significantly lower grades than other students in either format (Table S2).

One approach for disentangling the influence of students’ choice of format from that of course format itself was to conduct a well-controlled regression. It compared the outcomes of students who chose the face-to-face version in the Winter quarters before the pandemic with those of students who took the course when only the online version was offered (i.e., Spring quarters before the pandemic). Total course grade (out of 100) was regressed on course format and on controls for student demographic and academic characteristics. Course format had no significant effect on student performance in this regression (Table S3).
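The sample selection behind this comparison can be sketched as a filter over enrollment records. This is a minimal illustration, not the authors' procedure. The record layout `Enrollment` and the helper `no_choice_comparison` are hypothetical names, and the sketch assumes each record carries the term, year, format, and total grade. Pre-pandemic offerings are taken to be those from 2013 through 2020, as stated in the text. Spring students had no choice of format, so an online-versus-face-to-face difference in this sample should be less entangled with self-selection into the online version.

```python
from dataclasses import dataclass

@dataclass
class Enrollment:
    """Hypothetical record layout; the real grades are confidential under FERPA."""
    term: str      # "Winter" or "Spring"
    year: int
    online: bool   # True if enrolled in the online version
    grade: float   # total course grade, 0-100

def no_choice_comparison(records):
    """Select the pre-pandemic (2013-2020) comparison sample: students who chose
    face-to-face in a Winter quarter, plus students in Spring quarters, when only
    the online version was offered. Returns the selected records and the matching
    Format indicator (1 = online, 0 = face-to-face)."""
    subset = [
        r for r in records
        if r.year <= 2020
        and ((r.term == "Winter" and not r.online) or (r.term == "Spring" and r.online))
    ]
    fmt = [1 if r.online else 0 for r in subset]
    return subset, fmt

# Invented records, one per case.
records = [
    Enrollment("Winter", 2019, False, 88.0),  # chose face-to-face: kept
    Enrollment("Winter", 2019, True, 84.0),   # chose online: excluded
    Enrollment("Spring", 2018, True, 86.0),   # online was the only option: kept
    Enrollment("Spring", 2021, True, 85.0),   # pandemic offering: excluded
]
subset, fmt = no_choice_comparison(records)
```

The selected records and `fmt` would then feed a regression of grade on Format plus controls, like the models given later in this article.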

Another approach for disentangling the influence of format selection from that of course format was to compare performance on different types of assessments. Weekly quizzes were administered online and based on the online textbook, and therefore depended entirely on online material. The other assessments (weekly writing assignments, the midterm and final exams, and participation in weekly discussion sections) were probably enriched by face-to-face lectures and discussion sections. During the Winter quarters before the pandemic, when students could choose between face-to-face and online versions, quiz scores did not differ significantly between the two formats. Scores on the other assessments, however, were lower for students enrolled in the online version (Fig. 2). Moreover, students majoring in the humanities achieved lower quiz scores, but course version influenced only the last quiz, which focused on the sociology of climate change (Table S4). These results indicate that students who could choose their version of the course performed equally on material that was independent of course format. They performed worse in the online version on material that depended on course format.
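The logic of this comparison can be illustrated with a per-assessment two-sample test. The article does not state which test produced the significance letters in Fig. 2. Welch's unequal-variance t test is used here purely as an assumption, and the scores are toy numbers, not study data. The function names `welch_t` and `format_gaps` are hypothetical. The pattern the text describes corresponds to a near-zero gap for quizzes alongside a positive face-to-face minus online gap for the format-dependent assessments.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def format_gaps(scores):
    """scores maps assessment type -> (face_to_face_scores, online_scores).
    Returns assessment type -> (mean F2F minus mean online, Welch t, df)."""
    out = {}
    for kind, (f2f, onl) in scores.items():
        t, df = welch_t(f2f, onl)
        out[kind] = (mean(f2f) - mean(onl), t, df)
    return out

# Toy scores only, chosen to mimic the qualitative pattern described above.
gaps = format_gaps({
    "quizzes": ([80, 85, 90], [80, 85, 90]),
    "exams": ([80, 82, 84], [74, 76, 78]),
})
```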

Different letters above the bars indicate that the grades on a type of assignment differed significantly (P < 0.01) between the students in the F2F and online versions.

We based 10% of the overall course grade on participation in discussion sections, which we evaluated primarily on attendance. Discussion sections in the online version were conducted synchronously via video conferencing with up to 15 students per section. Those in the face-to-face version were conducted on campus with up to 25 students per section. Students enrolled in the online version of the course participated in 5.5% fewer discussion sections than those in the face-to-face version (Fig. 2). The difference was similar whether the versions were offered in the same quarter (Winter) or in different quarters (Table S5).
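A rough, back-of-the-envelope check helps put this attendance gap in scale. It is our own arithmetic, not an analysis reported by the authors, and it rests on two assumptions. First, the participation score is proportional to attendance. Second, the 5.5% gap means 5.5 percentage points of the participation score. Write $w$ for the weight of participation in the course grade and $\Delta P$ for the participation gap. The direct effect on the 0–100 course grade would then be:

$$\Delta G_{\text{participation}} \approx w \times \Delta P = 0.10 \times 5.5 = 0.55 \text{ points}$$

Taken at face value, attendance itself would directly account for only about a quarter of the roughly 2-point overall gap. Any larger role for discussion sections would have to act indirectly, through performance on the writing assignments and exams.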

This study offers both methodological and topical insights. We identified differences in outcomes between course formats using well-controlled regression analyses of various subsets of the data. A student’s performance in this course depended most strongly on the student’s overall Grade Point Average (Tables 2, S1, S2, S3, and S5). This suggests that the course required proficiency in the same skills as other college courses.

The outcomes of students who had only the online option (i.e., Spring quarters before the pandemic) did not differ significantly from those of students who selected the face-to-face version in Winter quarters before the pandemic (Table S3). Perhaps our most illuminating finding was that differences in outcomes between formats were significant for the writing assignments and exams, but not for the quizzes. We hypothesized that online students would not be disadvantaged on the quizzes because the quizzes were based entirely on online material, and this proved to be the case (Fig. 2). Lower attendance in the online discussion sections (Fig. 2) might be responsible for the poorer performance of online students on the writing assignments and exams (Fig. 2). Future research should seek approaches to address this deficiency in online courses.

This study’s findings offer insight into the effects of COVID-19 on higher education. Our results show that online coursework incurs only a small outcome penalty for students when a choice between formats is offered (Table 2). The pandemic itself caused no significant difference in the outcomes of online students (Table S1). Given these findings, we are cautiously optimistic that an online format, before or during COVID-19, may not be substantially detrimental to student learning in courses similar to the one studied here. This holds provided that adequate care is given to aligning course content and instruction between formats.

One should consider the limitations of these findings as well. These data derived from an introductory, lower-division course that is usually taken as an elective, and these findings may not generalize to more technical or advanced courses, or courses that include lab work or group projects. Additionally, our use of well-controlled regression analysis to test the research questions limited our ability to account for selection. We discuss selection effects further in the supplemental materials.

Despite the hardship it has caused, the COVID-19 pandemic offers unique opportunities for further research on online coursework. Many students are now enrolled in online courses that were previously face-to-face. This provides much more data with which to compare online and face-to-face outcomes without the confound of course format selection. We suggest that future research leverage these data to better understand the efficacy of online coursework.

Undergraduate students who chose the online version of an introductory course on climate change performed 2% worse (on a scale of 0–100) than those who chose the face-to-face version. The convenience of the online course—it required only one synchronous, online meeting per week versus three synchronous, on campus meetings per week—might be worth this small penalty. In particular, students who have to be away from campus classrooms for employment opportunities, family obligations, athletic events, year-abroad programs, or social distancing are well served by an online format.

Table 1 provides the syllabus for the course. During Winter quarters 2013 through 2015 and 2017 through 2020, the primary instructor (A. J. Bloom) taught both the online and face-to-face versions concurrently, whereas in Winter 2016, a second instructor (Dr. Margaret Swisher-Mantor) taught both versions. The primary instructor taught only the online version during Winter quarter 2021 and Spring quarters of 2013, 2014, 2015, 2017, 2018, and 2021.

All elements of the course are available for free at https://www.climatechangecourse.org/, including a free multi-media textbook at https://indd.adobe.com/view/7eafc24d-9151-4493-85d2-cb3f2e5a2a51 that is regularly updated. Before 2016, a printed version of the textbook was available for purchase25.

The course covered:

- (a) the physical sciences (history of Earth’s climate, causes of change, and predictions);
- (b) biological consequences (direct effects of rising CO2, global warming, precipitation changes, and ocean acidification);
- (c) technological mitigation and adaptation (transportation, electricity generation, buildings, and geoengineering);
- (d) the social sciences (economics, law, and social change).

Lecture materials were available as 48 short (less than 15 min) videos. They were also presented twice per week in 50-minute live lectures that were streamed over the internet or posted as videos on the course website on the same day. Students, whether enrolled in the online or face-to-face version of the course, had access to the lecture materials in all forms. Students attended a mandatory weekly 50-minute discussion section. It met either synchronously online via video conferencing (Adobe Connect or Zoom), with up to 15 students per section and a choice of 8 meeting times, or face-to-face, with up to 25 students per section and a choice of 4 meeting times.

Assessments of the students included:

- (a) weekly quizzes, each composed of 10 to 12 multiple-choice questions drawn randomly from a pool of about 50 questions that was available as a practice quiz in the multi-media textbook;
- (b) participation in the weekly discussion sections, based mostly on attendance;
- (c) weekly writing assignments that alternated between exercises and essays;
- (d) a proctored midterm exam with 25 multiple-choice questions, drawn from the same question pools as the weekly quizzes plus a few from the lectures, and one essay question in which a student explained the greenhouse effect;
- (e) a proctored final exam with 50 multiple-choice questions, likewise drawn from the weekly quiz pools plus a few from the lectures, and one essay question in which a student explained what they would do, if anything, about climate change and why they would choose this course of action.
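Drawing a weekly quiz from its question pool amounts to sampling without replacement. This is a minimal sketch of that idea, not the course platform's implementation. `draw_quiz` is a hypothetical helper. The pool of exactly 50 labeled questions is an illustrative assumption, since the text says "about 50". A fixed random seed is used only to make the draw reproducible.

```python
import random

def draw_quiz(pool, rng, min_questions=10, max_questions=12):
    """Return one quiz: a random number of questions between min_questions and
    max_questions (inclusive), sampled without replacement from pool."""
    k = rng.randint(min_questions, max_questions)  # randint includes both endpoints
    return rng.sample(pool, k)

pool = [f"Q{n:02d}" for n in range(1, 51)]  # hypothetical pool of 50 question IDs
quiz = draw_quiz(pool, random.Random(177))  # seeded only so the draw is reproducible
```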

We predicted that quiz performance would not differ between students in the online and face-to-face versions of the course because the quizzes were based entirely on the textbook. Performance on the other assessments, by contrast, would depend more on course format because those assessments relied more on information in lectures and discussion sections.

We fit the three models using an ordinary least squares linear regression (function lm) implemented in R version 4.0.3 (R Core Team, 2013):

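$$G_i = b_0 + b_1\,(\mathrm{Format}_i \text{ or } \mathrm{Covid}_i) + e_i, \qquad \text{(Model 1)}$$

$$G_i = b_0 + b_1\,(\mathrm{Format}_i \text{ or } \mathrm{Covid}_i) + b_2\,\mathrm{MixedLang}_i + b_3\,\mathrm{NonEngLang}_i + b_4\,\mathrm{Male}_i + b_5\,\mathrm{Senior}_i + b_6\,\mathrm{Humanities}_i + e_i, \qquad \text{(Model 2)}$$

$$G_i = b_0 + b_1\,(\mathrm{Format}_i \text{ or } \mathrm{Covid}_i) + b_2\,\mathrm{MixedLang}_i + b_3\,\mathrm{NonEngLang}_i + b_4\,\mathrm{Male}_i + b_5\,\mathrm{Senior}_i + b_6\,\mathrm{Humanities}_i + b_7\,\mathrm{GPA}_i + b_8\,\mathrm{URM}_i + b_9\,\mathrm{LowIncome}_i + b_{10}\,\mathrm{FirstGen}_i + e_i, \qquad \text{(Model 3)}$$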

where Gi is the grade for student i on a 0 to 100 scale and ei is the residual error. In these models, the variable of interest is either Format or Covid. Format is a binary indicator coded 1 for the online format and 0 for the face-to-face format. Covid is a binary indicator coded 1 for the course offerings in 2021 and 0 for earlier years. The coefficient b1 represents the marginal effect of the online format, or of the pandemic, on grade.

In Model 1, b1 is the unconditional difference in mean grade between the online and face-to-face versions of the course, or between offerings during and before the pandemic. It is an offset from b0, which is the mean grade in the face-to-face format or before the pandemic. In Models 2 and 3, b1 is the same difference after accounting for the students’ demographic makeup in each format or time period. Model 3 additionally accounts for further demographic characteristics and for previous academic achievement (GPA).

For each coefficient, the function lm calculated:

- (a) the ordinary least squares estimate and its standard error, with COVID, Online, Mixed Lang. Home, Non-English Home, Male, Senior, Humanities, URM, Low Income, and First Generation coded as 0 or 1 and GPA ranging from 0 to 4;
- (b) the t value for the Wald test of the hypothesis H0: bj = 0;
- (c) the associated P value.

P ≤ 0.05 was considered significant.
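The estimation described above can be sketched in Python with NumPy. This is not the authors' code (they used R's lm). It is a minimal illustration on synthetic data with invented effect sizes, and `ols_wald` is a hypothetical function name. The sketch shows two things. First, in Model 1 the OLS intercept b0 equals the face-to-face mean grade, and the slope b1 on the single binary Format indicator equals the online minus face-to-face difference in means. Second, it shows how standard errors and Wald t values follow from the fit. The P values use a normal approximation to the t distribution, which is close for samples of this size but not identical to what lm reports.

```python
import math
import numpy as np

def ols_wald(X, y):
    """OLS fit of y on X (X must already contain an intercept column).

    Returns coefficient estimates, classical standard errors, Wald t values for
    H0: b_j = 0, and two-sided P values from a normal approximation
    (R's lm uses the t distribution with n - p degrees of freedom instead).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (n - p)        # unbiased residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)   # homoskedastic covariance of the estimates
    se = np.sqrt(np.diag(cov))
    t = coef / se
    p_values = np.array([math.erfc(abs(tj) / math.sqrt(2.0)) for tj in t])
    return coef, se, t, p_values

# Synthetic data only: these numbers are invented, not the study's data.
rng = np.random.default_rng(0)
n = 1790
online = rng.integers(0, 2, size=n)   # Format_i: 1 = online, 0 = face-to-face
gpa = rng.uniform(2.0, 4.0, size=n)   # GPA_i on a 0-4 scale
grade = 50 + 8 * gpa - 2 * online + rng.normal(0, 5, size=n)

# Model 1: intercept plus the Format indicator only.
X1 = np.column_stack([np.ones(n), online])
coef1, se1, t1, p1 = ols_wald(X1, grade)
f2f_mean = grade[online == 0].mean()
online_mean = grade[online == 1].mean()
print(coef1, f2f_mean, online_mean - f2f_mean)  # b0 equals the F2F mean; b1 equals the difference

# A cut-down Model 3-style fit that also controls for GPA.
X3 = np.column_stack([np.ones(n), online, gpa])
coef3, se3, t3, p3 = ols_wald(X3, grade)
print(coef3[1], se3[1], p3[1])
```

With real data, the Model 3 design matrix would stack the intercept, Format (or Covid), and the ten covariates in the order of the equation, giving eleven columns.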

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

All materials for the course, including the multi-media textbook, are publicly available for free. Student grades and demographic information in the United States are confidential according to the FERPA (Federal Educational Rights and Privacy Act; https://www2.ed.gov/policy/gen/guid/fpco/ferpa/index.html). Such data can be provided to the public only if they are aggregated for large groups (e.g., > 10 students). The authors judged that a dataset for large groups would duplicate the information already presented in Tables 2 and S1–S7.
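The aggregation rule mentioned above can be illustrated with simple small-cell suppression. This is a minimal sketch, not the authors' procedure or a statement of FERPA requirements. The threshold of more than 10 students is the example given in the text, and `aggregate_for_release` is a hypothetical helper. Suppressing small groups alone does not guarantee de-identification: subtracting released subgroup totals from an overall total can reveal a suppressed cell. This sketch therefore shows only the basic idea.

```python
from collections import defaultdict
from statistics import mean

# Example threshold taken from the text ("e.g., > 10 students"); not a value from the FERPA rules.
MIN_GROUP_SIZE = 11

def aggregate_for_release(rows, min_size=MIN_GROUP_SIZE):
    """rows: iterable of (group_label, grade) pairs.
    Returns {group_label: (n_students, mean_grade)} only for groups with at least
    min_size students; smaller groups are suppressed entirely."""
    groups = defaultdict(list)
    for label, grade in rows:
        groups[label].append(grade)
    return {g: (len(v), mean(v)) for g, v in groups.items() if len(v) >= min_size}

rows = [("Senior", 80.0)] * 12 + [("Junior", 70.0)] * 5  # invented example rows
released = aggregate_for_release(rows)
```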

Bloom, A. J. Climate Change: Causes, Consequences, and Solutions, https://climatechangecourse.org/ (2022).

Simpson, M. & Anderson, B. History and heritage in open, flexible and distance education. J. Open Flexible Distance Learn. 16, 1–10 (2012).

Bettinger, E. & Loeb, S. Promises and pitfalls of online education. Evidence Speaks Reports 2, 1–4 (2017).

Protopsaltis, S. & Baum, S. Does online education live up to its promise? A look at the evidence and implications for federal policy. Center for Educational Policy Evaluation (2019).

Allen, I. E. & Seaman, J. Changing Course: Ten Years of Tracking Online Education in the United States. (Babson Survey Research Group and Quahog Research Group, LLC, 2013).

De Brey, C., Snyder, T. D., Zhang, A. & Dillow, S. A. Digest of Education Statistics 2019 (NCES 2021-009). 55th edn, (National Center for Education Statistics, Institute of Education Sciences, U.S. Department of Education, 2021).

Crews, T. B., Wilkinson, K. & Neill, J. K. Principles for good practice in undergraduate education: Effective online course design to assist students’ success. J. Online Learn. Teach. 11, 87–103 (2015).

Grant, M. R. & Thornton, H. R. Best practices in undergraduate adult-centered online learning: Mechanisms for course design and delivery. J. Online Learn. Teach. 3, 346–356 (2007).

McGee, P., Windes, D. & Torres, M. Experienced online instructors: beliefs and preferred supports regarding online teaching. J. Comput. Higher Educ. 29, 331–352 (2017).

Greenhow, C. & Galvin, S. Teaching with social media: evidence-based strategies for making remote higher education less remote. Inf. Learn. Sci. 121, 513–524 (2020).

Kaupp, R. Online penalty: The impact of online instruction on the Latino-White achievement gap. Journal of Applied Research in the Community College 19, 3–11 (2012).

Kizilcec, R. F. & Halawa, S. in Proceedings of the Second (2015) ACM Conference on Learning@ Scale. 57-66.

Means, B., Toyama, Y., Murphy, R., Bakia, M. & Jones, K. Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies, http://www2.ed.gov/rschstat/eval/tech/evidence-based-practices/finalreport.pdf (2010).

Jaggars, S. & Bailey, T. Effectiveness of fully online courses for college students: Response to a department of education meta-analysis (Community College Research Center, New York, NY, 2010); http://files.eric.ed.gov/fulltext/ED512274.pdf.

Xu, D. & Jaggars, S. S. Performance gaps between online and face-to-face courses: Differences across types of students and academic subject areas. J. Higher Educ. 85, 633–659 (2014).

Amro, H. J., Mundy, M.-A. & Kupczynski, L. The effects of age and gender on student achievement in face-to-face and online college algebra classes, https://files.eric.ed.gov/fulltext/EJ1056178.pdf (2015).

Johnson, H. P., Mejia, M. C. & Cook, K. Successful online courses in California’s community colleges. 24 (Public Policy Institute, 2015).

Wladis, C., Conway, K. & Hachey, A. C. Using course-level factors as predictors of online course outcomes: a multi-level analysis at a US urban community college. Stud. Higher Educ. 42, 184–200 (2017).

Bettinger, E. P., Fox, L., Loeb, S. & Taylor, E. S. Virtual classrooms: How online college courses affect student success. Am. Econ. Rev. 107, 2855–2875 (2017).

Faulconer, E. K., Griffith, J., Wood, B., Acharyya, S. & Roberts, D. A comparison of online, video synchronous, and traditional learning modes for an introductory undergraduate physics course. J. Sci. Educ. Technol. 27, 404–411 (2018).

Faulconer, E. K., Griffith, J. C., Wood, B. L., Acharyya, S. & Roberts, D. L. A comparison of online and traditional chemistry lecture and lab. Chem. Educ. Res. Pract. 19, 392–397 (2018).

Hart, C. M. D., Friedmann, E. & Hill, M. Online course-taking and student outcomes in California community colleges. Educ. Finance Policy 13, 42–71 (2018).

Spencer, D. & Temple, T. Examining students’ online course perceptions and comparing student performance outcomes in online and face-to-face classrooms. Online Learn. 25, 233–261 (2021).

Mead, C. et al. Online biology degree program broadens access for women, first-generation to college, and low-income students, but grade disparities remain. PLOS ONE 15, e0243916 (2020).

Bloom, A. J. Global Climate Change: Convergence of Disciplines. (Sinauer Assoc., 2010).

We thank Ellen Osmundson, Richard Plant, Paul Kasemsap, Anna Knapp, Bill Breidenbach, Xiaxiao Shi, Dana Rae Lawrence, Josh Claxton, and Jenna O’Kelley for their comments on the manuscript. This research was funded in part by UC Office of the President Innovative Learning Technology Initiative 19921, NSF grant DUE-09-50396 (AJB), NSF grant IOS-16-55810 (AJB), NSF grant CHE-19-04310 (AJB), USDA-IWYP-16-06702 (AJB), and John B. Orr Endowment (AJB).

Stephanie Pulford

Present address: Tempo Automation, 2460 Alameda Street, San Francisco, CA, 94103, USA

Center for Educational Effectiveness, University of California, Davis, CA, USA

Sattik Ghosh & Stephanie Pulford

School of Education, University of California, Davis, CA, USA

Sattik Ghosh

Department of Plant Sciences, University of California, Davis, CA, USA

Arnold J. Bloom

Conceptualization: A.J.B., S.G., S.P. Methodology: A.J.B., S.G., S.P. Investigation: A.J.B., S.G. Funding acquisition: A.J.B. Supervision: A.J.B. Writing—original draft: A.J.B. Writing—review & editing: A.J.B., S.G., S.P.

Correspondence to Arnold J. Bloom.

The authors declare no competing interests.

Communications Earth & Environment thanks Aldo Bazan-Ramirez and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editors: Heike Langenberg and Clare Davis.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

Ghosh, S., Pulford, S. & Bloom, A.J. Remote learning slightly decreased student performance in an introductory undergraduate course on climate change. Commun Earth Environ 3, 177 (2022). https://doi.org/10.1038/s43247-022-00506-6

Received: 24 March 2022

Accepted: 25 July 2022

Published: 06 August 2022

DOI: https://doi.org/10.1038/s43247-022-00506-6

© 2022 Springer Nature Limited
